Salesforce Zero-Data Retention & Data Masking in the Einstein Trust Layer

Securing Salesforce Against Generative AI LLMs

Mark Runyon | June 20, 2024

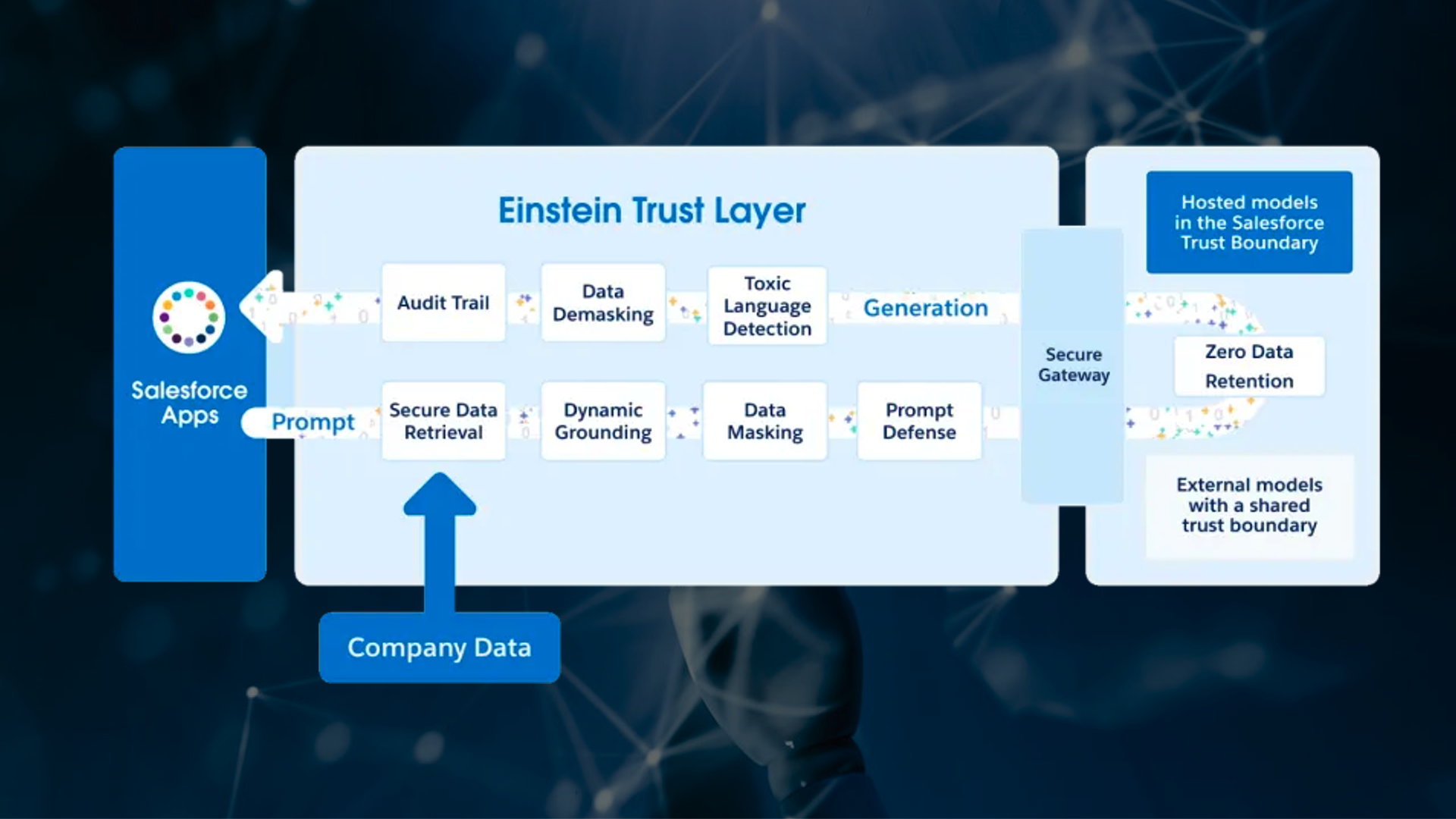

I'm sure you've heard about Einstein AI and all the cool things that instantly become possible in Salesforce once it's infused with AI. Although it's exciting, we need to approach AI with caution. We need to ensure our corporate data stays safe and doesn't become training data for the large language models (LLMs) behind generative AI. Thankfully, the zero-data retention policy and data masking are two safeguards in the Einstein Trust Layer that protect our Salesforce data. Let's look at how they work.

How LLMs Work

Popular generative AI tools, like OpenAI's ChatGPT, can collect your conversations and use them to train and improve their models. If you are asking ChatGPT for a killer margarita recipe, there isn't much to be concerned about. The problem comes when you feed it personally identifiable information (PII) or intellectual property. That sensitive data can become part of the training pool, and it is also exposed if OpenAI suffers a data breach. Many companies have banned their employees from using generative AI tools because of this risk. Now think about Salesforce interacting with these tools: a lot of personal information could be at risk.

Zero-Data Retention

Enter the Einstein Trust Layer. It implements a set of data and privacy controls to keep your company's data safe when interacting with third-party generative AI tools. Salesforce has partnered with OpenAI and Azure OpenAI to establish zero-data retention policies. This means your Salesforce data will never be used to train their LLMs, and it will not be retained by these third-party providers. Also, no one on the provider's side will ever see this data. This applies both to the prompt you send to the LLM and to the response you get back. Essentially, the LLM provider keeps no record that the interaction ever took place.

Data Masking

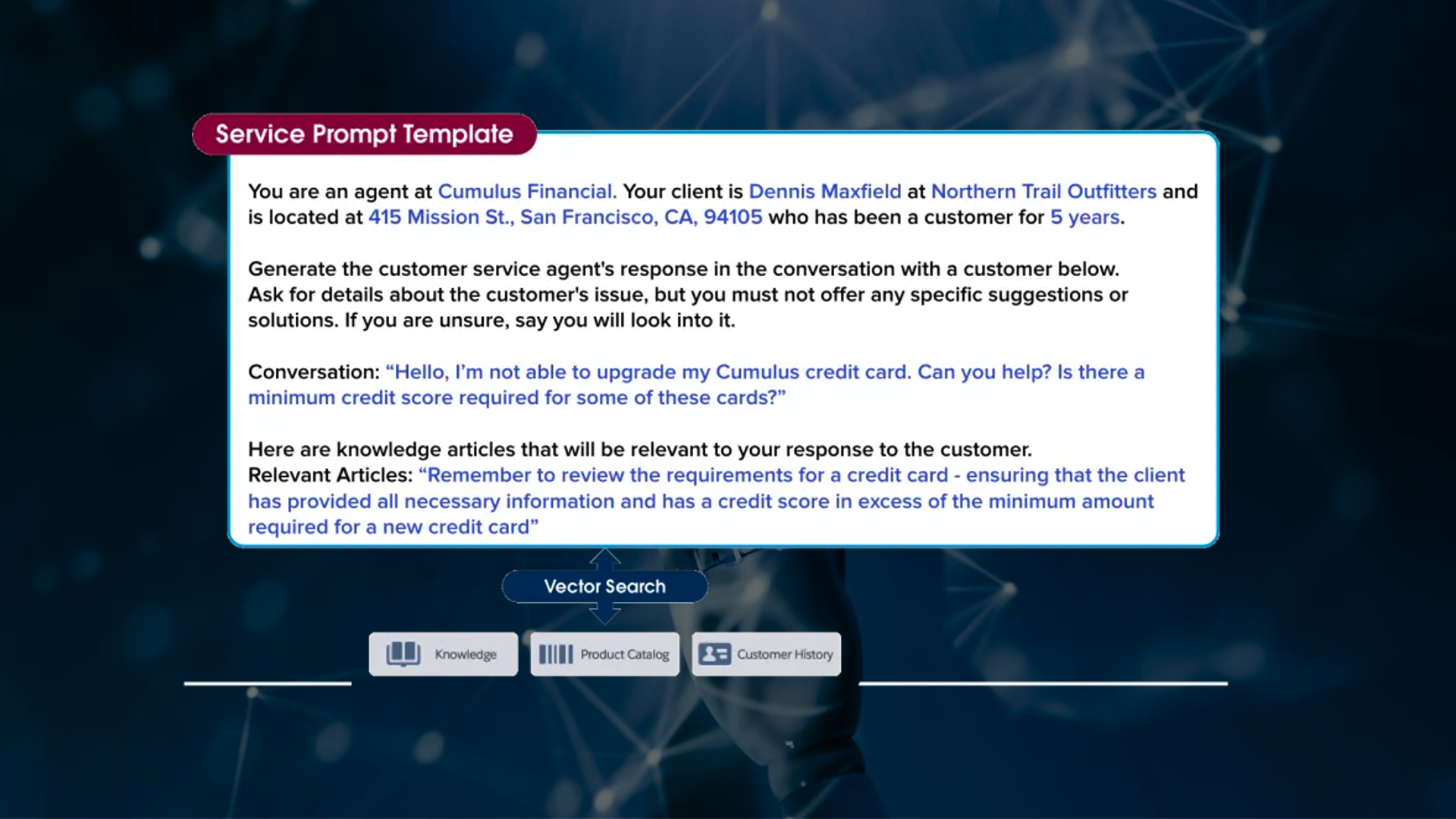

Still don't trust agreements forged between corporate Goliaths? Before your prompt is ever sent over the secure gateway to OpenAI, Salesforce sanitizes it through a process called data masking, removing sensitive information and replacing it with anonymized placeholders. All OpenAI sees are generic identifiers. When the response comes back from the LLM, Salesforce unmasks it, swapping the placeholders back for the original values so the response you receive reads correctly.
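To make the masking and unmasking round trip more concrete, here is a minimal Python sketch. It is not Salesforce's implementation: the regex patterns, the `EMAIL_0`-style placeholder format, and the `mask`/`unmask` function names are all hypothetical, and the actual call to the LLM is left out. The key idea it illustrates is that the placeholder-to-value mapping stays on the trusted side, so the LLM only ever sees generic identifiers.

```python
import re

# Hypothetical detection rules for two kinds of sensitive data. The real
# Einstein Trust Layer uses its own detection; these regexes are illustrative.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
}


def mask(text: str) -> tuple[str, dict[str, str]]:
    """Replace sensitive values with generic placeholders.

    Returns the masked text plus a mapping from placeholder to original value.
    The mapping stays on the trusted side and is never sent to the LLM.
    """
    mapping: dict[str, str] = {}
    for label, pattern in PATTERNS.items():
        def substitute(match: re.Match, label: str = label) -> str:
            # Number placeholders in the order they are created.
            placeholder = f"{label}_{len(mapping)}"
            mapping[placeholder] = match.group(0)
            return placeholder

        text = pattern.sub(substitute, text)
    return text, mapping


def unmask(text: str, mapping: dict[str, str]) -> str:
    """Swap placeholders in the LLM response back to the original values."""
    # Replace longer placeholders first so EMAIL_1 cannot clobber EMAIL_10.
    for placeholder in sorted(mapping, key=len, reverse=True):
        text = text.replace(placeholder, mapping[placeholder])
    return text


if __name__ == "__main__":
    prompt = "Draft a follow-up email to jane.doe@example.com, phone 555-123-4567."
    masked, mapping = mask(prompt)
    print(masked)  # only this masked text would go to the LLM
    # Pretend this came back from the LLM:
    response = "Call PHONE_1 and write to EMAIL_0."
    print(unmask(response, mapping))
```

Running it prints `Draft a follow-up email to EMAIL_0, phone PHONE_1.` for the masked prompt and then the response with the real email address and phone number restored. A simple string replacement is enough for unmasking here because the placeholders are distinctive tokens; a production system would need sturdier detection than two regexes.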

What About Content & Abuse Moderation?

OpenAI is actually doing something useful when it retains your data. Beyond improving its model, it uses the prompt and response data to detect prompt injection attacks and to perform content moderation. In the Einstein Trust Layer, that work still happens, but the responsibility for that policing shifts to Salesforce.

Zero-data retention and data masking are just two of the tools the Einstein Trust Layer uses to secure communication between third-party LLMs and Salesforce. We'll explore its other features in future articles, so check back in the weeks ahead.